- Pentaho data integration migrate job from series

- Pentaho data integration migrate job from download

- Pentaho data integration migrate job from free

- Pentaho data integration migrate job from Windows

Now that we’ve got the basics of Transformations and Jobs down, in the next part of the series I am going to show you some cool, advanced things you can do with PDI, such as quickly and easily migrating from one database to another (MySQL to Hive), Metadata Injection, and running Pentaho MapReduce jobs on a Hadoop cluster.

Pentaho data integration migrate job from Windows

I have no idea why it is not running in the Windows command prompt using the .bat file.

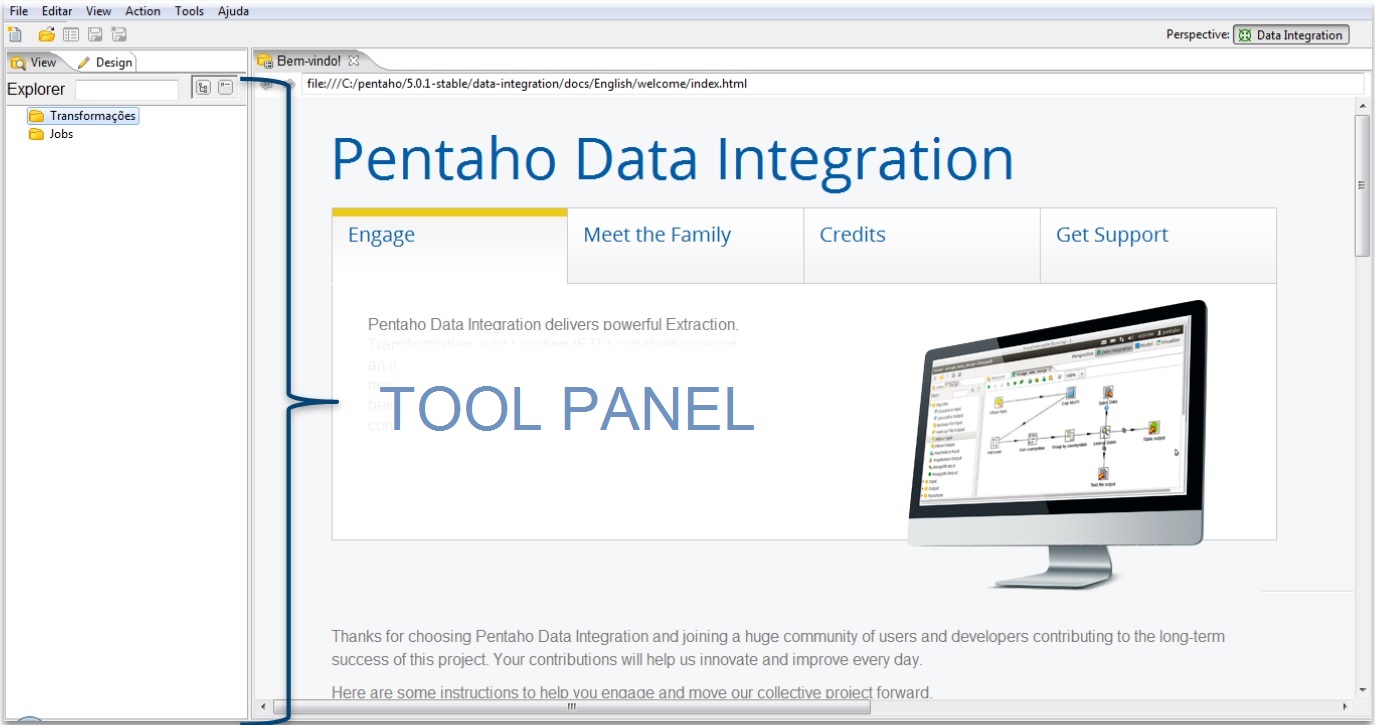

I installed PDI 6.0 and created a PDI job. In Pentaho Spoon the job works fine. However, when I created a job.bat file and tried to execute the job from the shell, it did not execute at all.

The following diagram shows an example of a job using the three different hop types. The hop types are:

- Unconditional: the next job step always executes, regardless of what happens in the previous step. This hop is a black arrow with a padlock on it.
- Follow when true: the next job step executes only if the previous job step executes successfully (completes with true). This hop is green with a tick.
- Follow when false: the next job step executes only if the previous job step's execution was false/unsuccessful. This hop is red with a stop icon.

To launch the Pentaho Data Integration tool, double-click the Spoon.bat file in the pdi-ce-4.x.0-stable\data-integration or pdi-ce-5.0.1.A-stable\data-integration folder, and open the required KJB file.
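One common way to run a .kjb job outside Spoon is Kitchen, PDI's command-line job runner. It ships as Kitchen.bat (Windows) and kitchen.sh (Linux/macOS) in the same data-integration folder as Spoon.bat.

The minimal Python sketch below only assembles the Kitchen command line. It assumes the Windows-style `/file:` and `/level:` options and the Unix-style `-file=` and `-level=` options documented for Kitchen. Every path is a hypothetical placeholder, and the sketch is not a diagnosis of the job.bat problem above.

```python
import subprocess
from pathlib import PurePosixPath, PureWindowsPath
from typing import List


def build_kitchen_command(pdi_home: str, kjb_path: str, windows: bool,
                          level: str = "Basic") -> List[str]:
    """Build (but do not run) a Kitchen command line for a .kjb job file.

    Assumption: pdi_home is the unzipped data-integration folder that holds
    Kitchen.bat (Windows) or kitchen.sh (Linux/macOS).
    """
    if windows:
        runner = str(PureWindowsPath(pdi_home) / "Kitchen.bat")
        # Windows-style Kitchen options: /file:<path> and /level:<log level>
        return [runner, f"/file:{kjb_path}", f"/level:{level}"]
    runner = str(PurePosixPath(pdi_home) / "kitchen.sh")
    # Unix-style Kitchen options: -file=<path> and -level=<log level>
    return [runner, f"-file={kjb_path}", f"-level={level}"]


def run_kitchen(cmd: List[str]) -> int:
    """Run Kitchen and return its exit code (0 means the job finished without errors)."""
    return subprocess.run(cmd).returncode


if __name__ == "__main__":
    # Hypothetical install and job locations; adjust to your own folders.
    print(build_kitchen_command(r"C:\pdi\data-integration", r"C:\jobs\my_job.kjb", windows=True))
    print(build_kitchen_command("/opt/pdi/data-integration", "/jobs/my_job.kjb", windows=False))
```

Passing the command to `subprocess` as a list, rather than as one string, lets Python quote each argument, which helps when a folder name contains spaces.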

Pentaho data integration migrate job from download

This enables you to migrate jobs and transformations from development to test to … BMC recommends that you download the job files into the folder in which you unzipped the Pentaho Data Integration tool.

Pentaho Business Analytics Platform consists of a collection of tools to integrate, standardize, visualize, and analyze business data. Pentaho Data Integration (PDI, also called Kettle) and Pentaho Report Designer are two of the tools within this platform. Pentaho DI (PDI) is an ETL tool that allows you to visually process data using … PDI provides the ETL (Extract, Transform and Load) engine. It integrates data from several sources, cleans and filters out junk data, transforms it into a useful format, and finally loads it into the desired destination file or database.

Pentaho Report Designer is used to create business reports based on data from a variety of sources. These reports consist of tables, charts, and graphs that help the business team gain useful insights into their data and make critical business decisions.

The Business Intelligence (BI) Server is a server application that hosts content published from the desktop applications, such as Pentaho reports and data integration job and transformation files. It also provides a scheduling feature to automate regular ETL jobs. A dashboard created in the BI Server is a single-page report containing key features from Pentaho reports, giving a quick overview without having to go through multi-page reports.

This is Part 2 of the Pentaho series. Part 1 opened this n-part series about using the Pentaho Data Integration tool, so if you haven’t already, check out Part 1 first!

Jobs

A Job allows high-level steps to be strung together and is very useful for running maintenance-style tasks, such as verifying that files or databases exist. Due to the nature of maintenance tasks, it is important that they run in a specific order (for example, check that the database exists -> grab some data). You wouldn’t want these tasks performed in a different order or in parallel. This is another reason why Jobs are used for these tasks: unlike Transformations, they do not execute all steps in parallel.

Like transformations, a job uses the concept of steps and hops. However, the steps take the form of a Transformation, a Job, or a job-level step (maintenance-style tasks). The Transformation step simply allows you to plug in a previously created transformation and join it onto another step.

The first rule of any job is that it must begin with a ‘Start’ step: you must have a Start entry in order to begin the sequence of job steps. As you can see in the above example, the job begins with a Start and then strings two transformations together. In between them, it runs a job-level step that checks whether a file exists. You can also string whole jobs together in the same way as you do transformations, if you want to abstract up a level.

Some important characteristics of job entries are:

- A job step passes a result object on to the next job step. The result object contains rows. Once a particular job entry is completed, all of these rows are passed together to the next job entry; they are not streamed.
- By default, job entries are executed in sequence; only rarely are they executed in parallel.

There are three types of job hops that can be used: unconditional, follow when true, and follow when false.
